Climate Mitigation and the Price of CCUS

By Claudia Nyon | Edited by Abigael Eminza

This four-part series explores the opportunities, issues, and costs of Carbon Capture, Utilisation and Storage (CCUS), with a particular focus on Malaysia’s newly enacted CCUS Act 2025 (Malaymail, 2025).

CCUS has emerged as one of the most debated tools in the global decarbonisation toolkit, straddling the line between necessity and controversy. Initially rooted in the 1920s with natural gas purification and expanded in the 1970s through enhanced oil recovery (EOR), CCUS has since evolved into a proposed solution for hard-to-abate sectors, such as cement and steel. Its relevance has grown in the wake of the Paris Agreement, with more than 30 major projects announced globally since 2020 and countries like Malaysia enacting dedicated legislation such as the CCUS Act 2025 to spur adoption. Yet, despite decades of technical deployment, CCUS costs have remained stubbornly high and resistant to the steep declines seen in renewables, raising questions about scalability and economic efficiency. This introduction sets the stage for examining CCUS’s history, economics, policy drivers, and its contested role in achieving net-zero.

What is CCUS?

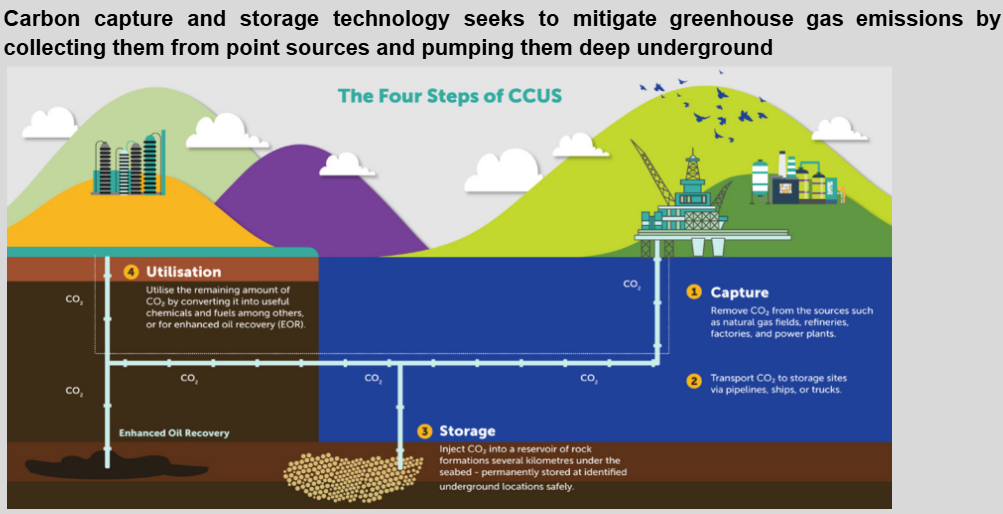

Carbon Capture and Storage (CCS) involves capturing carbon dioxide from large point sources, such as power plants, and securely storing it underground to prevent its release into the atmosphere. Carbon Capture, Utilisation, and Storage (CCUS) extends this concept by repurposing captured CO2 for industrial applications.

History of Carbon Capture and Its Utilisation in Enhanced Oil Recovery

1920s: Early carbon capture emerged with natural gas purification, which required separating carbon dioxide from gas streams.

1970s: Captured CO2 began to be injected into oil fields for Enhanced Oil Recovery (EOR), a practice that continues to this day.

Today: Captured CO2 remains widely used in EOR to unlock trapped oil reserves, one of the earliest and most sustained applications of carbon capture technology.

The practice of capturing carbon dioxide from gas streams traces back to fossil fuel extraction. While oil and natural gas often occur together in the same reservoir, early fossil fuel development largely overlooked natural gas due to the lack of adequate pipeline infrastructure (Energy Information Administration Office of Oil and Gas, 2006).

By the early 1920s, improvements in pipeline technology had increased demand for natural gas, prompting the development of techniques to remove carbon dioxide from it, a process known as ‘purification’.

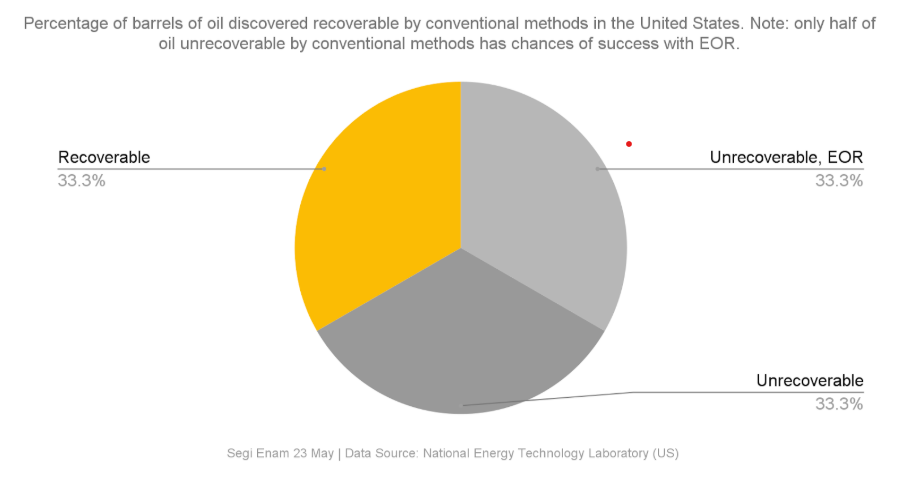

The first commercial CO2 capture and injection for EOR began in Texas in the 1970s (Cherepovitsyn, 2020). EOR remains the largest application of CCUS: primary and secondary recovery leave roughly two-thirds of the oil in a reservoir untouched, so operators inject CO2 into declining oil fields to unlock the trapped reserves (National Energy Technology Laboratory).

Approximately 73% of the CO2 captured annually in the United States is used for EOR to unlock additional fossil fuel reserves (IEEFA, 2022).

Petronas has implemented EOR techniques to extract fossil fuels in Malaysia (New Straits Times, 2014).

CCUS and EOR: Statistics of Use

CCUS in Global Climate Change Mitigation

CCUS currently occupies a complex but increasingly central role in carbon policy and carbon economics.

The Paris Agreement of 2015 set ambitious emissions reduction targets. Although the exact pathway to net-zero emissions by mid-century remains highly debated, meeting these targets means the world must reduce today’s roughly 50 Gt of total annual CO2-equivalent emissions to around net zero by mid-century, with reductions of around 40% by 2030 (Energy Transitions Commission, 2022).
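To give a sense of how steep the 2030 milestone is, the short Python sketch below converts a 40% cut into an implied constant annual rate of decline. It rests on assumptions of our own, not anything stated by the Energy Transitions Commission: that "today" means 2022, and that emissions fall by the same percentage every year.

```python
# Illustrative arithmetic only. ASSUMPTIONS (not from the cited source):
# the ~50 Gt CO2e baseline refers to 2022, and emissions fall by a constant
# percentage each year until 2030.
BASELINE_GT = 50.0       # total annual CO2-equivalent emissions today (Gt)
CUT_BY_2030 = 0.40       # ~40% reduction by 2030
YEARS = 2030 - 2022      # assumed number of years to get there

target_2030 = BASELINE_GT * (1 - CUT_BY_2030)
# Constant yearly decline r such that (1 - r) ** YEARS == 1 - CUT_BY_2030
annual_decline = 1 - (1 - CUT_BY_2030) ** (1 / YEARS)

print(f"2030 level: {target_2030:.0f} Gt CO2e/yr")
print(f"implied decline: {annual_decline:.1%} per year")
```

Under these assumptions, global emissions would need to fall by roughly 6% every year this decade; a later base year would leave fewer years and make the required rate steeper.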

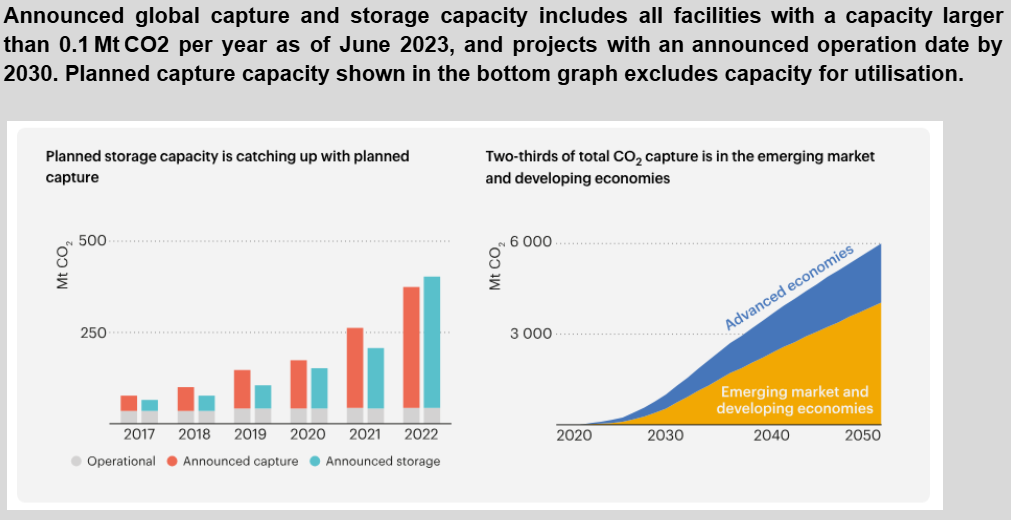

Against this backdrop, CCUS has positioned itself as a solution for decarbonising industries where alternatives are limited, such as cement, which accounts for around 7% of global CO2 emissions (IEA, 2023), and for delivering carbon removals over the next few decades.

More than 30 commercial CCUS projects have been announced globally since 2020, with around $27 billion in investment nearing a final investment decision (IEA, 2020).

Malaysia’s National Energy Transition Roadmap (NETR) projects that CCUS will mitigate 5% of energy-sector emissions by 2050 (NETR, 2023). (See Part 2 onwards for more.)

The Price of CCUS

The Elusive Cost of Carbon Avoidance

One of the most persistent challenges in evaluating CCUS is the lack of a single, definitive cost estimate for preventing one metric ton of CO2 from entering the atmosphere, a metric known as the "avoidance cost."
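Avoidance cost is not the same as the cost of capture quoted in the figures that follow: running the capture unit consumes energy, so a plant with CCUS burns more fuel and generates more CO2 per unit of electricity than the plant it replaces. A conventional way to express both metrics for a power plant is sketched below in Python. The plant numbers are hypothetical placeholders chosen only to show the mechanics; they do not come from the studies cited in this article.

```python
# Sketch of the conventional capture-cost vs avoidance-cost calculation for a
# power plant. All numbers in the example are HYPOTHETICAL placeholders.

def cost_per_tonne_captured(lcoe_ref, lcoe_ccs, gross_intensity_ccs, capture_rate):
    """Extra cost of electricity ($/MWh) per tonne of CO2 captured per MWh."""
    captured = gross_intensity_ccs * capture_rate            # tCO2 captured per MWh
    return (lcoe_ccs - lcoe_ref) / captured

def cost_per_tonne_avoided(lcoe_ref, lcoe_ccs, intensity_ref,
                           gross_intensity_ccs, capture_rate):
    """Extra cost of electricity ($/MWh) per tonne of CO2 no longer emitted per MWh."""
    emitted_ccs = gross_intensity_ccs * (1 - capture_rate)   # tCO2 still emitted per MWh
    return (lcoe_ccs - lcoe_ref) / (intensity_ref - emitted_ccs)

# Hypothetical gas plant: $60/MWh and 0.37 tCO2/MWh without capture.
# With capture, the energy penalty raises CO2 generated to 0.42 t/MWh
# and the cost of electricity to $90/MWh; 90% of the CO2 is captured.
captured = cost_per_tonne_captured(60, 90, 0.42, 0.90)
avoided = cost_per_tonne_avoided(60, 90, 0.37, 0.42, 0.90)
print(f"captured: ${captured:.0f}/tCO2")   # about $79/tCO2
print(f"avoided:  ${avoided:.0f}/tCO2")    # about $91/tCO2
```

Because each tonne avoided costs more than each tonne captured once the energy penalty is counted, capture-cost figures on their own tend to understate the price of keeping a tonne of CO2 out of the atmosphere.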

Estimated capture costs vary widely by emission source:

$55/tCO2 for coal-fired power generation (OECD, 2011).

$64/tCO2 for dilute gas streams, such as those from cement plants (Jaffar et al., 2023).

$71/tCO2 for flue gas (Raksajati et al., 2013).

$80/tCO2 for open-cycle gas-fired power plants (Brandl et al., 2021; Energy Transitions Commission, 2022).

Early-stage feasibility studies, which often form the basis of projections, tend to underestimate actual expenses by 15% to 30%, according to the OECD (2011); see also Table 1 in AACE (2005). The range widens further once site-specific variables such as infrastructure needs, regulatory hurdles, and regional labor costs are taken into account.

For example, a Norwegian study found that retrofitting CCUS to an existing gas plant required 30% additional spending because of factors such as specialized cooling systems and safety upgrades (OECD, 2011). This variability makes it difficult to compare technologies or to assume that one capture method holds a cost advantage over another.

The Trade-Off between Capture Rates and Costs

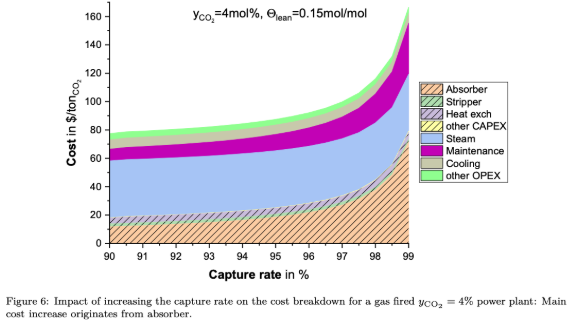

To meet net-zero targets, the CO2 capture rate should be as high as is economically viable and as close to 100% as is technologically possible. However, the changes to capture plant design and operation needed to approach a 100% capture rate drive costs up.

To illustrate, flue gas from a gas-fired power plant contains approximately 4 mol% CO2. After 99% of that CO2 is captured, the gas leaving the absorber contains only about 400 ppm CO2, which is lower than current atmospheric CO2 concentrations. Separating CO2 at the top of the absorber therefore becomes as challenging as direct air capture (Brandl et al., 2021).
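The 400 ppm figure can be reproduced with simple mole-balance arithmetic. The Python sketch below is an illustration under simplifying assumptions of our own: only CO2 is removed from the gas, and every other component passes through unchanged. The quick estimate simply multiplies 4% by the 1% left uncaptured; the second calculation also accounts for the gas shrinking as CO2 is removed, which nudges the result slightly higher.

```python
# Residual CO2 left in flue gas after capture (mole-balance arithmetic).
# ASSUMPTIONS (illustrative): concentrations are mole fractions, only CO2 is
# removed, and all other gas components pass through unchanged.

def residual_ppm(feed_fraction, capture_rate, account_for_shrinkage=True):
    """CO2 concentration (ppm) in the treated gas leaving the absorber."""
    co2_left = feed_fraction * (1 - capture_rate)       # mol CO2 left per mol feed gas
    if not account_for_shrinkage:
        return co2_left * 1e6                           # quick estimate
    gas_left = 1 - feed_fraction * capture_rate         # mol gas left per mol feed gas
    return co2_left / gas_left * 1e6

for rate in (0.90, 0.99):
    quick = residual_ppm(0.04, rate, account_for_shrinkage=False)
    exact = residual_ppm(0.04, rate)
    print(f"{rate:.0%} capture: ~{quick:.0f} ppm (quick), {exact:.0f} ppm (with shrinkage)")
```

At 99% capture this gives about 400 ppm on the quick estimate and about 416 ppm with shrinkage. Either way, the treated gas ends up at roughly atmospheric CO2 levels, which is why the last few percentage points of capture behave like direct air capture.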

For gas-fired power plants, increasing the capture rate from 90% to 96% adds a cost penalty of about 12%, raising the total from ~$80 to ~$90/tCO2. Increasing it to 99% could double the cost to around $160/tCO2 (Brandl et al., 2021; Energy Transitions Commission, 2022).
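Average figures understate how steep the curve becomes at the margin. The Python sketch below backs out the cost of the extra tonnes captured at each step, under an assumption of our own: that the $80, $90, and $160/tCO2 figures are average costs per tonne captured for the same plant and the same flue-gas flow, so that total cost per tonne of CO2 generated is the capture rate multiplied by the average cost.

```python
# Marginal cost of the additional tonnes captured at higher capture rates.
# ASSUMPTION: the figures are average $/tCO2 captured for the same plant and
# flue-gas flow, so cost per tonne of CO2 generated = rate * average cost.
AVERAGE_COST = {0.90: 80.0, 0.96: 90.0, 0.99: 160.0}   # capture rate -> $/tCO2

def marginal_costs(average_cost):
    """Return (low_rate, high_rate, $/tCO2 for the extra tonnes) for each step."""
    rates = sorted(average_cost)
    steps = []
    for low, high in zip(rates, rates[1:]):
        extra_cost = high * average_cost[high] - low * average_cost[low]
        extra_tonnes = high - low        # per tonne of CO2 generated
        steps.append((low, high, extra_cost / extra_tonnes))
    return steps

for low, high, cost in marginal_costs(AVERAGE_COST):
    print(f"{low:.0%} -> {high:.0%}: about ${cost:,.0f} per extra tCO2")
```

On these assumptions, the tonnes gained between 90% and 96% cost about $240 each, and those gained between 96% and 99% about $2,400 each, roughly ten times more.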

Most projects therefore target a 90% capture rate as a pragmatic balance between performance and affordability. Yet even this benchmark is often missed: real-world projects have fallen short of 90% capture because of cost-minimising decisions, engineering setbacks, or the early stage of technological deployment (to be explored later in this series).

Capture rates and costs for a gas-fired power plant: CCUS costs increase sharply as capture rates approach 100%.

Stagnant Costs and Missed Learning Curves

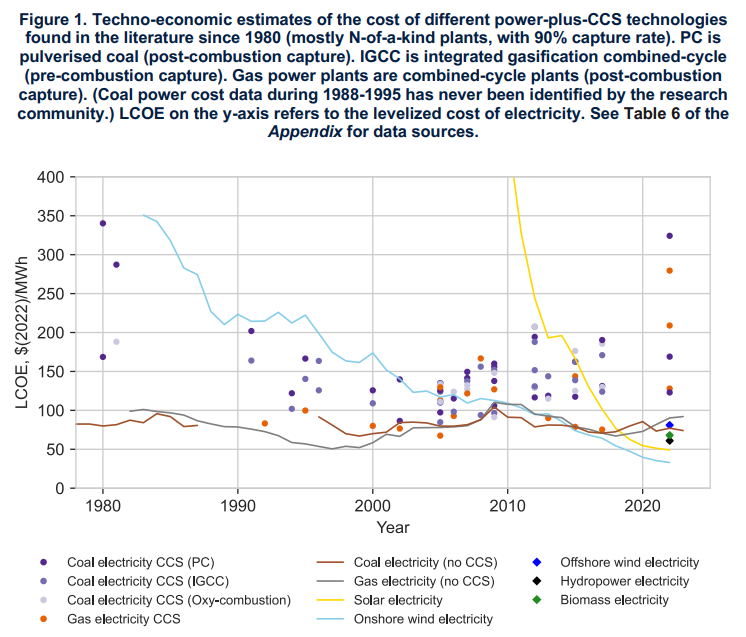

Unlike renewable energy technologies, which have seen dramatic cost reductions over decades, CCUS has defied expectations of similar progress.

A 2023 analysis found that cost estimates for fossil power plants with CCUS have not fallen in over 40 years, suggesting a lack of systemic learning across the industry, from capture to storage, despite decades of experience with every element of the chain (Bacilieri et al., 2023).

Figure 1, below, shows estimates of the cost of fossil power with CCUS from the academic literature and industry reports over the last 40 years. Many of these reports expected costs to decline in the future through technological learning. However, the plot makes clear that these expectations have so far not been realised. In fact, quite the opposite has happened: as more has been learned about the technology, cost estimates have generally risen (Bacilieri et al., 2023).

This stagnation is striking given that components such as CO2 pipelines and injection wells have been used commercially since the 1970s. By contrast, technologies such as solar panels and batteries typically see costs fall by 10 percent for every doubling of cumulative production capacity, a pattern CCUS has failed to replicate (Congressional Budget Office, 2023).
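The learning-curve pattern described here is often modelled with Wright's law, in which unit cost falls by a fixed fraction (the learning rate) each time cumulative production doubles. The Python sketch below is a minimal illustration using a hypothetical cost index of 100. It compares the 10 percent learning rate cited above with a 0 percent rate, which is one simple way to represent the flat CCUS cost estimates described earlier in this section.

```python
import math

def wright_cost(initial_cost, initial_capacity, capacity, learning_rate):
    """Unit cost under Wright's law once cumulative capacity reaches `capacity`.

    learning_rate is the fractional cost reduction per doubling (0.10 = 10%).
    """
    b = math.log2(1 - learning_rate)    # exponent chosen so 2 ** b == 1 - learning_rate
    return initial_cost * (capacity / initial_capacity) ** b

# Hypothetical cost index of 100, then cumulative capacity grows 32-fold
# (five doublings).
for rate in (0.10, 0.0):
    cost = wright_cost(100, 1, 32, rate)
    print(f"learning rate {rate:.0%}: cost index {cost:.1f}")
```

After five doublings, a 10% learning rate cuts the cost index to about 59 (0.9 to the fifth power), while a technology with no learning stays at 100 however much is deployed.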

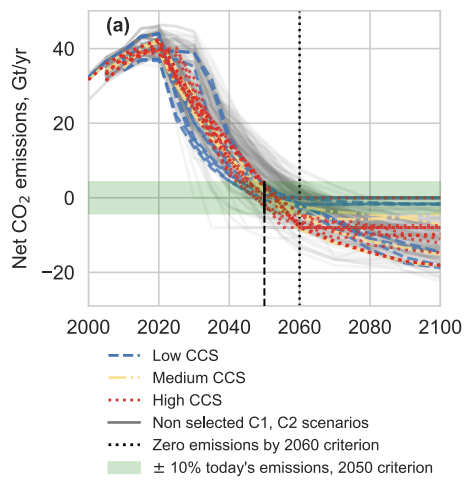

The High Price of Over-Reliance on CCUS

The high costs of CCUS have spurred debate about its optimal role in decarbonization. Recent modeling indicates that net-zero pathways relying heavily on CCUS could require $30 trillion more in spending than those prioritizing renewables and energy efficiency (Bacilieri et al., 2023).

This divergence arises because large-scale CCUS deployment delays the cost declines typically seen in alternatives like wind, solar, and green hydrogen. However, abandoning CCUS entirely isn’t economically viable either: certain industries, such as cement and steel, lack ready substitutes for fossil fuels, making limited CCUS deployment a cost-effective compromise (IPCC, 2023).

Net CO2 emissions over time for the low-CCUS (blue dashed lines), medium-CCUS (yellow dash-dotted lines), and high-CCUS (red dotted lines) scenarios, alongside all other C1 and C2 scenarios (grey solid lines). The black dotted line marks 2060, the latest year by which the selected scenarios must reach net zero. The green band and the vertical black solid and dashed lines highlight the corridor of ±10% of today’s CO2 emissions within which the scenarios must fall in 2050.

Foreseeable Economic and Policy Challenges

The divergence between high- and low-CCUS decarbonization pathways reveals a fundamental tension: economies that prioritize rapid scaling of renewables, electrolyzers, and energy storage achieve faster cost reductions through technological learning and economies of scale (Greig & Uden, 2021).

This dynamic creates a self-reinforcing cycle; accelerated deployment of alternatives further lowers their costs, reducing reliance on CCUS. By contrast, high-CCUS pathways face compounding expenses, as delayed investment in renewables perpetuates dependence on a technology with stubbornly stagnant costs.

Yet dismissing CCUS entirely ignores structural realities. Even critics acknowledge that it is unavoidable for hard-to-abate sectors such as cement (see Hughes, 2017), though its role remains hotly contested. Some fear that the technology now faces a critical juncture, the so-called "valley of death", where technical viability meets insufficient private investment (Reiner, 2016). Market forces alone appear inadequate: CCUS ranks among the costliest near-term mitigation options (IPCC, 2022), and most projects require government backing to pencil out financially (Rempel et al., 2023). This dependency is exacerbated by the fossil fuel industry’s tepid commitment: oil and gas firms allocated less than 1% of their 2020 capital expenditures to clean energy (World Energy Investment, 2021), raising questions about their willingness to drive meaningful CCUS scale-up without policy mandates.

Key findings: CCUS is neither a silver bullet nor universally accepted, but it’s unavoidable for net-zero, particularly in hard-to-abate industries. The debate now centers on how much CCUS is optimal, balancing cost, scalability, and emissions goals.

In this series:

Part 1: Climate Mitigation and the Price of CCUS

Part 2: Case Studies

Part 3: Malaysia’s Big Ambitions

Part 4: Issues for Successful Deployments

Reach us at khorreports[at]gmail.com